To-Do List

- code update: to-do list (12/18)

- get ratio figures online (12/18)

- add description to ratio figs (12/18)

- notable progress on 'Results' (12/19)

- finish ModYY description (12/20)

Monday

02.00p-05.30p working on boundary error bug

- no progress

Thursday

06.00a-12.00p working on boundary error bug

- added checkpoints for array sizes

12.00p-01.30p adding bug explanation to website

04.00p-06.00p working on boundary error bug

- boundaries are now communicating, still errors

Friday

08.00a-12.00p working on boundary error bug

- likely just a typo/math error at this point

12.00p-01.30p updating bug explanation on site

03.00p-04.00p code cleanup

04.00p-04.30p adding work log (this) to website

05.00p-06.00p finishing bug writeup

06.00p-07.00p finishing work log

- added auto-formatting, column auto-resizing

08.00p-01.00a finishing code cleanup

- ran into the original mpi_alltoallv issue again

Saturday

10.00a-01.00p more code cleanup

- finished, sent to John

11/16-11/20

Boundary Implementation

Data Sharing Across Processors

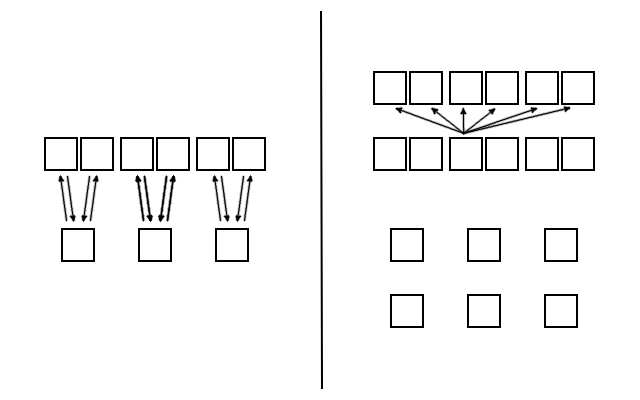

Data sharing is broken into three steps. The "Loading" step, the "Distributing" step, and the "Receiving" step.

To prepare for data sharing, the code first runs a setup routine for each boundary, where it calculates the following numbers

-

nZonesLoad- the number of zones an individual processor owns which are needed for the boundary calculation on the OTHER grid. -

nZonesDist- the number of zones an individual processor will receive from it's paired processor(s), which need to be sent to PEs on the processor's own grid. -

nZonesRecv- the number of zones an individual processor should receive from its distributing processor.

and the following arrays

-

loadCount- -

distCount-

The Loading Step

To set up the loading step, each processor loops through all ghost zones on its opposing grid and records which local zones it owns that are used to calculatie those ghost zones.

The total number of zones are recorded in nZonesLoad, while the

j- and k-values of each zone is recorded in the 1D arrays

jSendLoc and kSendLoc, respectively. The loop over all

ghost zones is set up that data kept organized by (other grid) processor as it is

loaded in to these arrays. i.e.,

_______________________________________________________

| | | | |

| j-vals for | j-vals for | j-vals for | j-vals for |

jSendLoc = | pe(y/z) = 0 | pe(y/z) = 1 | pe(y/z) = 2 | pe(y/z) = n |

| ghost zones | ghost zones | ghost zones | ghost zones |

|_____________|_____________|_____________|_____________|

The structure of the loading loop over all ghost zones is as follows:

nZonesLoadY = 0

loadCount = 0

do k = 1, kmaxYY

myRecvPE = (k-1)/ksYY // pe on OTHER grid

gphi = zzcYY(k)

jjmin = jnminYY(k) - 6

jjmax = jnmaxYY(k) - 6

do j = 1, 12

if (j < 7) then

gthe = zycYY(jjmin) - zdyYY(jjmin)*j

else

gthe = zycYY(jjmax) + zdyYY(jjmax)*(j-6)

end if

// (gthe,gphi) are on OTHER grid - find coords on THIS grid

nthe = acos (sin(gthe) *sin(gphi))

nphi = atan2(cos(gthe),-sin(gthe)*cos(gphi))

n = 2

do while (nthe > zyc(n))

n = n + 1

end do

m = 2

do while (nphi > zzc(m))

m = m + 1

end do

// [... 'loading step' code ...]

end do

end do

where integers n and m correspond to the

j- and k-values of the other grid's ghost zones'

location on the local grid. The following code is used to record these

values.

// [... 'loading step' code ...]

// for each zone [(n-0,m-0), (n-0,m-1), (n-1,m-0), (n-1,m-1)]

// if a PE owns the ghost zone's data, record what it needs to send

// 1. (n-0,m-0)

mySendPE = (m-1)/ks // pe on THIS grid

loadCount(mySendPE,myRecvPE) = loadCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZonesLoadY = nZonesLoadY + 1

jSendLocY(nZonesLoadY) = n-0

kSendLocY(nZonesLoadY) = m-0

end if

// 2. (n-0,m-1)

mySendPE = (m-2)/ks

loadCount(mySendPE,myRecvPE) = loadCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZonesLoadY = nZonesLoadY + 1

jSendLocY(nZonesLoadY) = n-0

kSendLocY(nZonesLoadY) = m-1

end if

// 3. (n-1,m-0)

mySendPE = (m-1)/ks

loadCount(mySendPE,myRecvPE) = loadCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZonesLoadY = nZonesLoadY + 1

jSendLocY(nZonesLoadY) = n-1

kSendLocY(nZonesLoadY) = m-0

end if

// 4. (n-1,m-1)

mySendPE = (m-2)/ks

loadCount(mySendPE,myRecvPE) = loadCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZonesLoadY = nZonesLoadY + 1

jSendLocY(nZonesLoadY) = n-1

kSendLocY(nZonesLoadY) = m-1

end if

After all ghost zones have been looped over, we also have the array

loadCount which is only used to check values are correct after

setup for the distributing step has completed. An example array from the Yin

grid using npey=6 and npez=8 (yin:

pey=4, pez=8; yang: pey=2, pez=8)

yin | 32 34 36 38 40 42 44 46 |

--------------------------------------------------------------

0 | 0 0 0 17 17 0 0 0 | 34

4 | 0 0 54 43 43 54 0 0 | 194

8 | 41 60 6 0 0 6 60 41 | 214

12 | 19 0 0 0 0 0 0 19 | 38

16 | 17 0 0 0 0 0 0 17 | 34

20 | 43 54 0 0 0 0 54 43 | 194

24 | 0 6 60 41 41 60 6 0 | 214

28 | 0 0 0 19 19 0 0 0 | 38

--------------------------------------------------------------

| 120 120 120 120 120 120 120 120 | 960

yang | 0 4 8 12 16 20 24 28 |

--------------------------------------------------------------

32 | 0 0 15 115 115 15 0 0 | 260

34 | 0 0 99 5 5 99 0 0 | 208

36 | 1 96 6 0 0 6 96 1 | 206

38 | 119 24 0 0 0 0 24 119 | 286

40 | 115 15 0 0 0 0 15 115 | 260

42 | 5 99 0 0 0 0 99 5 | 208

44 | 0 6 96 1 1 96 6 0 | 206

46 | 0 0 24 119 119 24 0 0 | 286

--------------------------------------------------------------

| 240 240 240 240 240 240 240 240 | 1920

The first column lists each PE on jcol=0 which will

be loading data. The middle section lists how many zones are intended

for each PE on the Yang grid. The final column lists total zones

to be sent for each PE.

The Distributing Step

Setup for the distributing step is structured the same as that of the loading step.

However, each processor now loops through all ghost zones on its own grid and determines

which PEs on the other grid own data for those ghost zones. If the PE which owns that

zone is (one of) the processor's paired PE(s), the processor then records the zone and

the j- and k-values of the ghost zone it is destined for. These

values are recorded 1D arrays structured the same as jSendLoc and

kSendLoc. This structuring is important as it is required to share data

efficiently between the two processors.

nZonesDistY = 0

distCount = 0

do k = 1, kmax

myRecvPE = (k-1)/ksort // pe on THIS grid

nphi = zzc(k)

jjmin = jnmin(k) - 6

jjmax = jnmax(k) - 6

do j = 1, 12

if (j < 7) then

nthe = zycYY(jjmin) - zdyYY(jjmin)*j

else

nthe = zycYY(jjmax) + zdyYY(jjmax)*(j-6)

end if

// (nthe,nphi) are on THIS grid - find coords on OTHER grid

gthe = acos (sin(nthe) *sin(nphi))

gphi = atan2(cos(nthe),-sin(nthe)*cos(nphi))

n = 2

do while (nthe > zyc(n))

n = n + 1

end do

m = 2

do while (nphi > zzc(m))

m = m + 1

end do

// [... 'distributing step' code ...]

end do

end do

where the distributing step code is

// [... 'distributing step' code ...]

// for each zone [(n-0,m-0), (n-0,m-1), (n-1,m-0), (n-1,m-1)]

// if a PE will receive data from it's paired processor, record

// where it needs to send that data

// 1. (n-0,m-0)

mySendPE = (m-1)/ksYY // pe on OTHER grid (== pe on THIS grid)

distCount(mySendPE,myRecvPE) = distCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZoneDistY = nZoneDistY + 1

nRecvLocYbuff(nZoneDistY) = 1

jRecvLocYbuff(nZoneDistY) = j

kRecvLocYbuff(nZoneDistY) = k

end if

// 2. (n-0,m-1)

mySendPE = (m-2)/ksYY

distCount(mySendPE,myRecvPE) = distCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZoneDistY = nZoneDistY + 1

nRecvLocYbuff(nZoneDistY) = 2

jRecvLocYbuff(nZoneDistY) = j

kRecvLocYbuff(nZoneDistY) = k

end if

// 3. (n-1,m-0)

mySendPE = (m-1)/ksYY

distCount(mySendPE,myRecvPE) = distCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZoneDistY = nZoneDistY + 1

nRecvLocYbuff(nZoneDistY) = 3

jRecvLocYbuff(nZoneDistY) = j

kRecvLocYbuff(nZoneDistY) = k

end if

// 4. (n-1,m-1)

mySendPE = (m-2)/ksYY

distCount(mySendPE,myRecvPE) = distCount(mySendPE,myRecvPE) + 1

if (mySendPE == mypez) then

nZoneDistY = nZoneDistY + 1

nRecvLocYbuff(nZoneDistY) = 4

jRecvLocYbuff(nZoneDistY) = j

kRecvLocYbuff(nZoneDistY) = k

end if

Once all ghost zones have been looped over, we now have all data organized.

The final setup step is to distribute these arrays to each processor using the

same method that will be used to distribute actual boundary data (so that each

processor knows what order it will receive boundary data). To determine how the

data is sent, we use the distCount array. An example for the same

processor configuration as above gives

yin | 0 4 8 12 16 20 24 28 |

--------------------------------------------------------------

0 | 0 0 15 115 115 15 0 0 | 260

4 | 0 0 99 5 5 99 0 0 | 208

8 | 1 96 6 0 0 6 96 1 | 206

12 | 119 24 0 0 0 0 24 119 | 286

16 | 115 15 0 0 0 0 15 115 | 260

20 | 5 99 0 0 0 0 99 5 | 208

24 | 0 6 96 1 1 96 6 0 | 206

28 | 0 0 24 119 119 24 0 0 | 286

--------------------------------------------------------------

| 240 240 240 240 240 240 240 240 | 1920

yang | 32 34 36 38 40 42 44 46 |

--------------------------------------------------------------

32 | 0 0 0 17 17 0 0 0 | 34

34 | 0 0 54 43 43 54 0 0 | 194

36 | 41 60 6 0 0 6 60 41 | 214

38 | 19 0 0 0 0 0 0 19 | 38

40 | 17 0 0 0 0 0 0 17 | 34

42 | 43 54 0 0 0 0 54 43 | 194

44 | 0 6 60 41 41 60 6 0 | 214

46 | 0 0 0 19 19 0 0 0 | 38

--------------------------------------------------------------

| 120 120 120 120 120 120 120 120 | 960

Note that these arrays are identical to those produced in the 'Loading' step, other than they are displayed by the opposite grids.

Code Errors (outdated)

mpirun -np 48 --bind-to-core ./vh1-inner

STEP = 0

dt = 0.000024

time = 0.000000

[tycho.physics.ncsu.edu:20459] *** An error occurred in MPI_Recv

[tycho.physics.ncsu.edu:20459] *** on communicator MPI_COMM_WORLD

[tycho.physics.ncsu.edu:20459] *** MPI_ERR_TRUNCATE: message truncated

[tycho.physics.ncsu.edu:20459] *** MPI_ERRORS_ARE_FATAL: your MPI job will now abort

mpi_alltoallv call on the final two processors.

<type> :: sendBuffer(*), recvBuffer(*)

integer :: sendCount(*), sendOffset(*)

integer :: recvCount(*), recvOffset(*)

call mpi_alltoallv(

sendBuffer,

sendCount,

sendOffset,

mpi_integer,

recvBuffer,

recvCount,

recvOffset,

mpi_integer,

mpi_communicator,

mpierr

)

Send and Receive - Sizes

pe | mypey mypez

--------------------------------------------------------------------------------

46 | 0 7

alltoallv : sendCount, sendOffset -- recvCount, recvOffset

pe | 0 1 2 3 4 5 6 7

--------------------------------------------------------------------------------

32 | 0 0 0 72 72 0 0 0 | 144

32 | 0 0 0 0 72 144 144 144 |

--------------------------------------------------------------------------------

32 | 1 2 3 4 1 2 3 4 | 20

32 | 0 0 0 168 240 312 480 480 |

================================================================================

pe | mypey mypez

--------------------------------------------------------------------------------

47 | 1 7

alltoallv : sendCount, sendOffset -- recvCount, recvOffset

pe | 0 1 2 3 4 5 6 7

--------------------------------------------------------------------------------

32 | 0 0 0 72 72 0 0 0 | 144

32 | 0 0 0 0 72 144 144 144 |

--------------------------------------------------------------------------------

32 | 1 2 3 4 1 2 3 4 | 20

32 | 0 0 0 168 240 312 480 480 |

================================================================================

Per Processor Breakdown

swapCountY from loading step (viewed as OTHER grid):

| 0 1 2 3 4 5 6 7 |

---------------------------------------------------------------------------------

0 | 0 0 0 72 72 0 0 0 | 144

1 | 0 12 228 168 168 228 12 0 | 816

2 | 168 228 12 0 0 12 228 168 | 816

3 | 72 0 0 0 0 0 0 72 | 144

4 | 72 0 0 0 0 0 0 72 | 144

5 | 168 228 12 0 0 12 228 168 | 816

6 | 0 12 228 168 168 228 12 0 | 816

7 | 0 0 0 72 72 0 0 0 | 144

---------------------------------------------------------------------------------

| 480 480 480 480 480 480 480 480 | 3840

swapCountY from distributing step (viewed as THIS grid):

| 0 1 2 3 4 5 6 7 |

---------------------------------------------------------------------------------

0 | 0 0 78 468 468 78 0 0 | 1092

1 | 0 12 390 12 12 390 12 0 | 828

2 | 12 390 12 0 0 12 390 12 | 828

3 | 468 78 0 0 0 0 78 468 | 1092

4 | 468 78 0 0 0 0 78 468 | 1092

5 | 12 390 12 0 0 12 390 12 | 828

6 | 0 12 390 12 12 390 12 0 | 828

7 | 0 0 78 468 468 78 0 0 | 1092

---------------------------------------------------------------------------------

| 960 960 960 960 960 960 960 960 | 7680